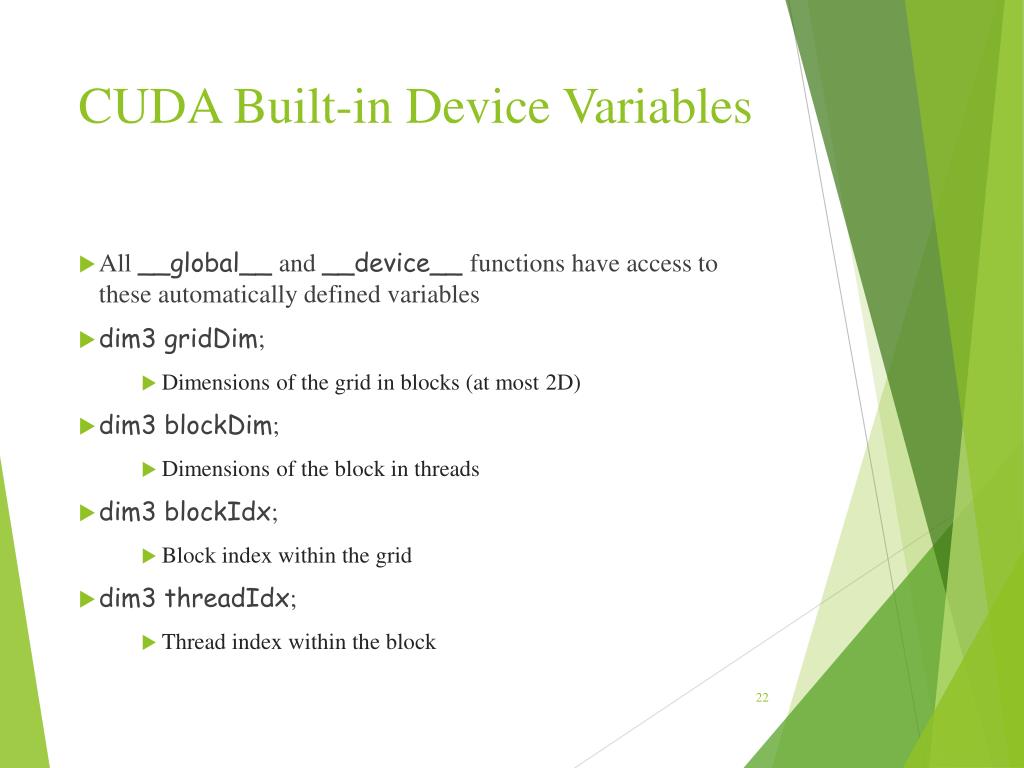

`dim3 blockDim`: this variable describes the number of threads in the block in each dimension. If you have `dim3 threads(tx, ty, tz)`, the following rules apply: `tx*ty*tz <= 1024`, `tx <= 1024`, `ty <= 1024`, `tz <= 64`. `dim3 gridDim`: this variable describes the number of blocks in the grid in each dimension. You could use, for example, `dim3 threads(32, 32)`. `dim3` is a CUDA-provided data type (available in both CUDA C/C++ and CUDA Fortran) with three dimensions. For a one-dimensional block and grid, you specify a dimensionality of 1 for the other two dimensions (in CUDA C/C++, unspecified components default to 1). If you use `dim3 threads(1024, 1024)`, the kernel will not be executed: the launch fails because 1024 × 1024 threads exceeds the per-block limit. The total number of threads per block must be at most 1024; the number of blocks you chose is fine.
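A minimal host-side sketch of the per-block rules above. The limits are hard-coded to the values quoted here, which is an assumption: real code should read `maxThreadsPerBlock` and `maxThreadsDim` from `cudaGetDeviceProperties`. `fitsBlockLimits` is a hypothetical helper name.

```cuda
#include <cuda_runtime.h>
#include <cassert>

// Assumed limits, matching the rules quoted above (not queried from a device).
const unsigned int kMaxThreadsPerBlock = 1024;
const unsigned int kMaxBlockX = 1024;
const unsigned int kMaxBlockY = 1024;
const unsigned int kMaxBlockZ = 64;

// Returns true if a block of shape b satisfies the assumed per-block limits.
bool fitsBlockLimits(dim3 b)
{
    // Each dimension has its own cap...
    if (b.x > kMaxBlockX || b.y > kMaxBlockY || b.z > kMaxBlockZ) return false;
    // ...and the product of all three must not exceed the per-block total.
    // 64-bit arithmetic so that e.g. 1024 * 1024 * 64 cannot overflow.
    unsigned long long total = 1ULL * b.x * b.y * b.z;
    return total <= kMaxThreadsPerBlock;
}
```

With these limits, `fitsBlockLimits(dim3(32, 32))` is true, while `fitsBlockLimits(dim3(1024, 1024))` is false: each dimension is within its cap, but the product is 1,048,576 threads.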

However, if I'm guessing correctly, I cannot combine a grid such as `dim3 blocks(65535, 65535)` with `dim3 threads(1024, 1024)`, because I have a maximum of 1024 threads per block and I'm requesting 1024 threads in each of two dimensions (1024 × 1024 threads per block). Is this correct? I know that I can comfortably work with configurations such as `dim3 blocks(65535)`. deviceQuery reports:

- Maximum sizes of each dimension of a grid: 65535 x 65535 x 65535
- Maximum sizes of each dimension of a block: 1024 x 1024 x 64
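To check the guess in code, here is a sketch that validates the grid and the block separately. `LaunchLimits`, `queryLimits` and `launchFits` are hypothetical names; `queryLimits` copies the real `cudaDeviceProp` fields `maxThreadsPerBlock`, `maxThreadsDim` and `maxGridSize`, and needs a CUDA device at run time.

```cuda
#include <cuda_runtime.h>
#include <cassert>

// Hypothetical container for the limits that deviceQuery reports.
struct LaunchLimits {
    int maxThreadsPerBlock;  // e.g. 1024
    int maxBlockDim[3];      // e.g. 1024 x 1024 x 64
    int maxGridDim[3];       // e.g. 65535 x 65535 x 65535 on the device quoted above
};

// Fill the limits from the runtime (requires a CUDA device when called).
cudaError_t queryLimits(int device, LaunchLimits* out)
{
    cudaDeviceProp prop;
    cudaError_t err = cudaGetDeviceProperties(&prop, device);
    if (err != cudaSuccess) return err;
    out->maxThreadsPerBlock = prop.maxThreadsPerBlock;
    for (int i = 0; i < 3; ++i) {
        out->maxBlockDim[i] = prop.maxThreadsDim[i];
        out->maxGridDim[i] = prop.maxGridSize[i];
    }
    return cudaSuccess;
}

// Grid limits count blocks, block limits count threads; in this sketch only
// the block is additionally checked against a total-size cap.
bool launchFits(dim3 grid, dim3 block, const LaunchLimits& lim)
{
    const unsigned int g[3] = {grid.x, grid.y, grid.z};
    const unsigned int b[3] = {block.x, block.y, block.z};
    for (int i = 0; i < 3; ++i) {
        if (g[i] > (unsigned int)lim.maxGridDim[i]) return false;
        if (b[i] > (unsigned int)lim.maxBlockDim[i]) return false;
    }
    unsigned long long threadsPerBlock = 1ULL * block.x * block.y * block.z;
    return threadsPerBlock <= (unsigned long long)lim.maxThreadsPerBlock;
}
```

With the deviceQuery values above, `dim3 blocks(65535, 65535)` with `dim3 threads(32, 32)` passes, while the same grid with `dim3 threads(1024, 1024)` fails only on the per-block total.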

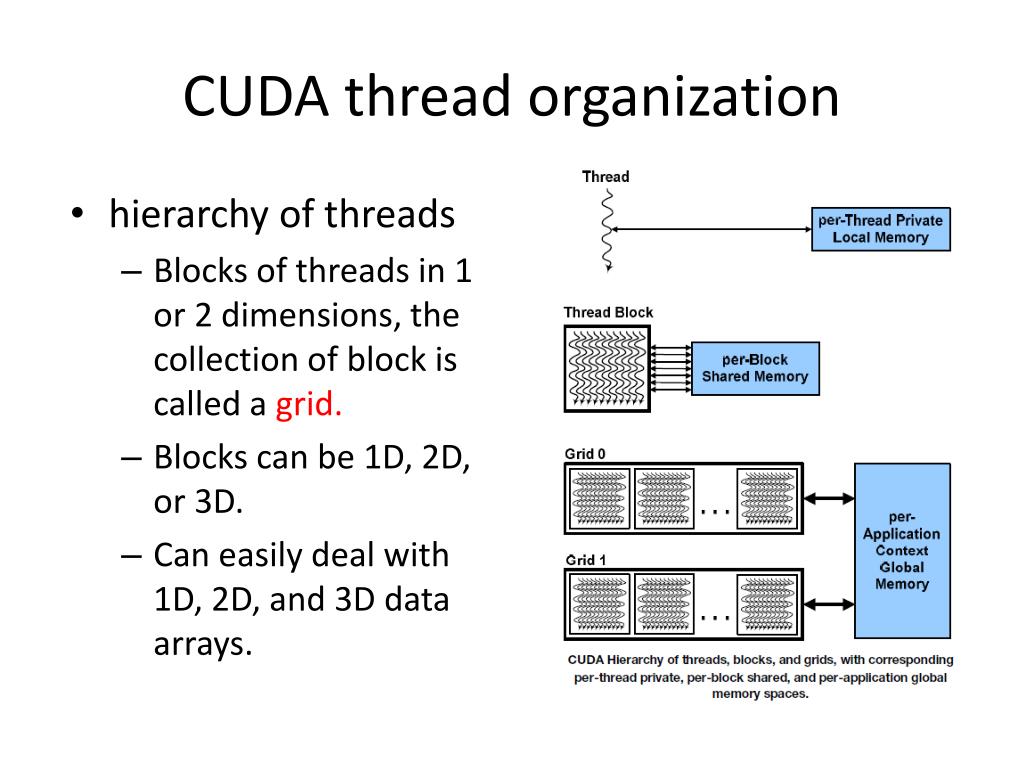

The deviceQuery output from the CUDA samples shows these limits on my device:

- Maximum number of threads per multiprocessor: 1536
- Maximum number of threads per block: 1024

The basic unit of execution in CUDA is the thread. Threads are organized into blocks, which are themselves organized into a grid. Grids and blocks can be one-, two- or three-dimensional. The dimensions of a block and the grid are specified at kernel launch time: `dim3 blockDim(16, 16); dim3 gridDim(10, 10, 10); mykernel<<<gridDim, blockDim>>>(...);`. In this example, each block consists of 16 × 16 = 256 threads and the grid consists of 10 × 10 × 10 = 1000 blocks, so CUDA will launch and manage 256 × 1000 = 256,000 threads for us. Threads are given access to their position, as well as to the grid and block layout, through four built-in variables: `threadIdx`, `blockIdx`, `blockDim` and `gridDim`.

Suppose instead we had fixed the number of threads in a block to be 256 in one dimension. Then we would have had 1D blocks within a 2D grid, with `ceil(W/256.0)` blocks along x so that the blocks cover an input of width W.
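The index arithmetic behind the four built-ins can be sketched as follows for the 2D-block, 3D-grid example. The helper names (`threadInBlock`, `blockInGrid`, `globalThreadId`, `totalThreads`, `gridFor1DBlocks`, `launchExample`) are hypothetical. The helpers are marked `__host__ __device__` so the same arithmetic can be checked on the CPU, and the x-fastest ordering is one common convention, not the only one.

```cuda
#include <cuda_runtime.h>
#include <cassert>

// Linear index of a thread inside its block (x varies fastest).
__host__ __device__ unsigned int threadInBlock(uint3 t, dim3 bdim)
{
    return t.x + t.y * bdim.x + t.z * bdim.x * bdim.y;
}

// Linear index of a block inside the grid (x varies fastest).
__host__ __device__ unsigned int blockInGrid(uint3 b, dim3 gdim)
{
    return b.x + b.y * gdim.x + b.z * gdim.x * gdim.y;
}

// Unique global index: all threads of lower-numbered blocks come first.
__host__ __device__ unsigned long long globalThreadId(uint3 t, uint3 b, dim3 bdim, dim3 gdim)
{
    unsigned long long threadsPerBlock = 1ULL * bdim.x * bdim.y * bdim.z;
    return blockInGrid(b, gdim) * threadsPerBlock + threadInBlock(t, bdim);
}

// On the device, the four built-in variables supply the arguments.
__global__ void writeIds(unsigned long long* out)
{
    unsigned long long id = globalThreadId(threadIdx, blockIdx, blockDim, gridDim);
    out[id] = id;
}

// Total number of threads launched for a configuration.
unsigned long long totalThreads(dim3 grid, dim3 block)
{
    return 1ULL * grid.x * grid.y * grid.z * block.x * block.y * block.z;
}

// 1D blocks of 256 threads in a 2D grid over a W x H input:
// ceil(W / 256.0) blocks along x (integer form), one block row per input row.
dim3 gridFor1DBlocks(unsigned int W, unsigned int H)
{
    return dim3((W + 255) / 256, H);
}

// Launch the configuration from the text; d_out must hold
// totalThreads(grid, block) = 256,000 entries.
cudaError_t launchExample(unsigned long long* d_out)
{
    dim3 block(16, 16);     // 256 threads per block
    dim3 grid(10, 10, 10);  // 1000 blocks
    writeIds<<<grid, block>>>(d_out);
    return cudaGetLastError();
}
```

The last thread of the last block, thread (15, 15, 0) in block (9, 9, 9), gets index 999 × 256 + 255 = 255,999, one less than the 256,000 threads launched.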

# CUDA Block Dimensions: dim3 Code

When a kernel is run for the first time, the CUDA runtime compiles it to the machine code appropriate for the specific GPU (this just-in-time step applies when the executable carries only intermediate code for that GPU) and transfers the program onto the device. The dimensions of the thread block are accessible within the kernel through the built-in `blockDim` variable.
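A sketch of `blockDim` in use: the classic one-dimensional index `blockIdx.x * blockDim.x + threadIdx.x`. The kernel `saxpy` and the helpers `blocksFor` and `runSaxpy` are illustrative names, and the device pointers are assumed to be allocated by the caller.

```cuda
#include <cuda_runtime.h>
#include <cassert>

// One element per thread; blockDim.x is the number of threads in every
// earlier block, so it scales blockIdx.x into a global offset.
__global__ void saxpy(int n, float a, const float* x, float* y)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)  // the last block may be only partly used
        y[i] = a * x[i] + y[i];
}

// Number of blocks needed to cover n elements (integer ceiling division).
unsigned int blocksFor(unsigned int n, unsigned int threadsPerBlock)
{
    assert(threadsPerBlock > 0);
    return (n + threadsPerBlock - 1) / threadsPerBlock;
}

// Host-side launch; d_x and d_y are assumed device pointers of length n.
cudaError_t runSaxpy(int n, float a, const float* d_x, float* d_y)
{
    if (n <= 0) return cudaSuccess;  // a launch with zero blocks would be rejected
    const unsigned int threads = 256;
    saxpy<<<blocksFor(n, threads), threads>>>(n, a, d_x, d_y);
    return cudaGetLastError();
}
```

Rounding the block count up and guarding with `i < n` is what lets a fixed block size of 256 handle any `n`.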

Note: Lecture 5's scope is too large to cover in an article of a reasonable length, so we're going to cover a subset of its topics: how the abstractions provided by CUDA map down to its implementation on graphics hardware.

## Device Execution

CUDA differentiates between the host (the computer's CPU and main memory) and the device (the GPU). In CUDA programs, function qualifiers specify both where a function runs and where it can be called from:

- Functions marked `__host__`, or unmarked, execute on the host and are only callable from the host.
- Functions marked `__device__` execute on the device and are only callable from the device.
- Functions marked `__global__` execute on the device and are callable from the host and, on GPUs of compute capability 3.5 and higher (dynamic parallelism), from the device as well.

Code run on the device is compiled into an intermediate representation (PTX).

The launch parameters inside the `<<< >>>` syntax can be of type `int` or `dim3`. When the grid and block are one-dimensional, plain integers suffice. When you need a two-dimensional or higher grid or block, use the `dim3` type in CUDA C: for example, `dim3 block(2, 2)` defines a 2 × 2 thread block. For three dimensions you only need to add one more parameter: `dim3 block(2, 2, 2)`. That's it.
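The qualifiers and the two launch forms can be seen side by side in this sketch. All names are hypothetical; the kernel flattens a 1D, 2D or 3D block into one index using `blockDim`, and the caller is assumed to pass a device buffer of at least 8 ints.

```cuda
#include <cuda_runtime.h>
#include <cassert>

// Callable from both sides: compiled for the host and for the device.
__host__ __device__ int square(int v) { return v * v; }

// Device-only helper: built-ins such as threadIdx exist only in device code.
__device__ int flatThreadIndex()
{
    return threadIdx.x + threadIdx.y * blockDim.x + threadIdx.z * blockDim.x * blockDim.y;
}

// Kernel: runs on the device, launched from the host with <<< >>>.
__global__ void fillSquares(int* out)
{
    int i = flatThreadIndex();
    out[i] = square(i);
}

// Unmarked function: host-only.
unsigned int threadsIn(dim3 block) { return block.x * block.y * block.z; }

// d_out is assumed to be a device buffer holding at least 8 ints.
cudaError_t launchExamples(int* d_out)
{
    fillSquares<<<1, 4>>>(d_out);         // int arguments: 1 block of 4 threads
    dim3 block2d(2, 2);                   // 2 x 2 block, also 4 threads
    fillSquares<<<1, block2d>>>(d_out);
    dim3 block3d(2, 2, 2);                // 2 x 2 x 2 block, 8 threads
    fillSquares<<<1, block3d>>>(d_out);
    return cudaGetLastError();            // check for launch errors
}
```

Because `square` is `__host__ __device__`, it can be tested directly on the CPU, while `flatThreadIndex` can only run inside a kernel.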
